Building Trust Amid Change: Lessons from Scholarly Publishing in 2025

Every few years, scholarly publishing experiences a moment that quietly but decisively reshapes its future. 2025 marked the onset of such a moment, through a series of developments that collectively forced the publishing ecosystem to pause, reflect, and recalibrate.

As 2025 draws to a close, it is clear that scholarly publishing has spent the year doing more than reacting to change. Across journals, platforms, institutions, and professional bodies, the past twelve months were marked by a steady process of consolidation, where ideas debated in earlier years began to take structural shape in everyday practice.

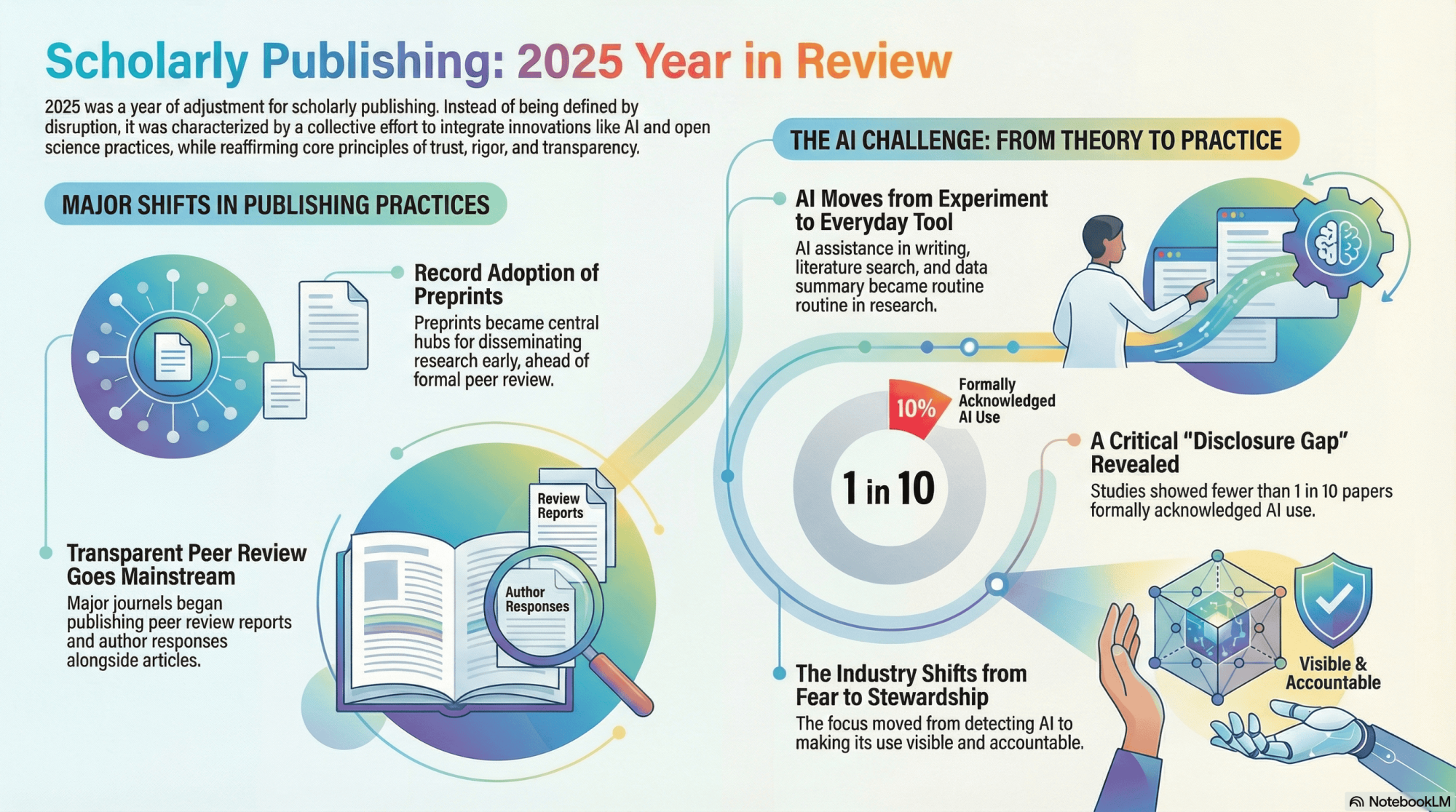

The year brought visible milestones: unprecedented growth in preprint adoption, expanded commitments to transparency in peer review, and more explicit ethical guidance addressing both technological and non-technological pressures on research communication. At the same time, artificial intelligence moved from experimental use to routine presence in academic workflows, prompting publishers and institutions to shift focus from abstract policy debates to practical questions of disclosure, accountability, and governance.

Taken together, these developments tell a broader story about the state of scholarly publishing in 2025. Rather than a period defined by disruption alone, it was a year characterized by adjustment and collective effort to integrate innovation while reaffirming the principles of trust, rigor, and openness that sustain the scholarly record.

This year-end reflection traces the key developments that shaped that journey and the lessons they leave as the community looks ahead.

Key Developments of 2025

1. Record Adoption of Preprints and Expanded Roles

One of the most striking metrics of 2025 was the continued growth of preprint platforms as central hubs in research dissemination. Preprints.org, one of the major multidisciplinary preprint servers, surpassed 100,000 posted preprints, a milestone reflecting accelerating adoption by authors worldwide and the platform’s expanding role in open science. The milestone underscores the importance of early dissemination ahead of formal peer review.

Furthermore, the trend shows that preprints have become not just a supplement to journal publication but a key pillar of contemporary scholarly communication: they improve visibility, enable early feedback, and bridge early findings with formal publication pathways.

2. Peer Review Gets More Transparent

Another major evolution in 2025 was the growing institutionalization of transparent peer review. In a significant move, Nature standardized transparent peer review for all newly published research papers. Under this new approach, peer review reports and author responses are published alongside articles, building on Nature’s earlier optional trials and aligning with broader industry calls for visibility into editorial decision-making. Reviewers remain anonymous unless they choose otherwise, preserving confidentiality while enhancing transparency.

3. AI Assistance—From Experimentation to Everyday Practice

2025 marked a shift beyond preliminary discussions on the use and novelty of AI-assisted writing and analysis. Researchers across disciplines were already using AI tools for language refinement, literature exploration, data summarization, and first-draft generation. Editorial teams began to encounter submissions where AI assistance was assumed rather than exceptional. This normalization shifted the conversation decisively. The question was no longer whether AI should be used in research communication, but how its use should be governed, documented, and evaluated.

This shift drove policy activity across the publishing landscape. Publisher guidelines that once relied on broad prohibitions or vague recommendations began to evolve toward more precise language. The STM Association’s classification of AI use in manuscript preparation marked an important step toward shared terminology. It set an essential foundation for consistent disclosure and enforcement across journals and platforms. Enago, for its part, initiated the Responsible AI movement to promote transparent and ethical AI usage, collaborating with stakeholders to establish standardized AI usage guidelines that educate researchers on best practices.

4. Evidence Exposes the Disclosure Gap

As AI adoption increased, empirical research added urgency to the ongoing discussions. Cross-sectional studies and preprints revealed a striking gap between AI usage and formal disclosure. In many cases, fewer than one in ten papers explicitly acknowledged AI assistance, even in journals with stated policies.

These findings did more than confirm suspicions. They reframed AI governance as an evidence-based challenge rather than a hypothetical risk. Disclosure was failing not through reluctance alone, but because guidance remained fragmented, inconsistent, and poorly operationalized at the submission stage.

5. Integrity Under Pressure

Midway through the year, several high-profile integrity incidents brought AI risks into sharper focus. Publications containing fabricated or unverifiable references, widely attributed to uncritical use of generative tools, triggered retractions and public debate. What unsettled editors was not the presence of AI, but the ease with which errors bypassed existing safeguards.

Discussions on industry-wide platforms emphasized a shared conclusion: traditional editorial workflows were not designed for probabilistic text generation. Peer review, long optimized for evaluating human-authored scholarship, required adaptation and reinforcement through clearer provenance checks and accountability systems.

Disclosure: The above image was generated using NotebookLM and is intended solely for understanding and illustrative purposes.

Perhaps the most underappreciated shift of 2025 was the growing recognition that ethics frameworks alone are insufficient without education. Through participation in global forums such as SSP and by hosting 20+ institutional webinars and workshops across 2025, Enago contributed to practical understanding in areas ranging from academic writing and AI literacy to academic integrity and disclosure practices.

By the close of the year, the contours of a more mature AI discourse had emerged. The industry moved away from fear-driven narratives toward stewardship. The focus shifted from detecting AI use to making it visible and accountable. Importantly, ethical AI was no longer framed as a constraint on innovation, but as a prerequisite for maintaining trust in scholarly outputs.

Enago in 2025: Two decades of partnership, practice, and responsible progress

2025 marked a significant moment for Enago itself. With 20 years in the scholarly publishing ecosystem, we reached a milestone that mirrors the broader evolution of research communication, from science communication and publication support to deeper engagement with research integrity, publishing ethics, and responsible technology adoption.

Responsible and Ethical AI: From Principles to Practice

Central to Enago’s work in 2025 was the continued expansion of its Responsible AI initiatives. As conversations around AI matured across the industry, Enago positioned its efforts around a practical objective: enabling researchers to use AI transparently, ethically, and with clear accountability rather than treating AI as either a shortcut or a risk to be avoided.

Through the Responsible AI movement, Enago actively engaged with researchers, editors, and institutional stakeholders to promote clarity around disclosure, authorship responsibility, and human-in-the-loop practices. These initiatives complemented broader industry guidance from organizations such as STM and COPE, translating high-level principles into applied understanding for day-to-day research workflows.

Contributing to a Global Peer Review Week Conversation

Peer Review Week (PRW) 2025 provided a standout moment in Enago’s year of engagement, offering an opportunity to elevate the conversation about AI’s role in peer review. As part of the global PRW activities, Enago hosted a well-attended panel discussion titled “Enhancing Peer Review with AI: Where Should We Draw the Line?” that brought together leading thinkers in research integrity and publishing practice. The panel featured a distinguished group of experts: Dr. Elisabeth Bik, a renowned science integrity consultant; Daniel Ucko, Head of Ethics and Research Integrity at APS; Patrick Starke, CEO and co-founder of ImageTwin; and Eliott Lumb, co-founder of Signals. Together they grounded the conversation in practical considerations around AI and peer review. These engagements reinforced a key message echoed across PRW 2025: that the future of peer review depends not on replacing human judgment, but on strengthening it through responsible augmentation and clearer governance.

Research & Beyond: Extending support beyond manuscripts

In 2025, Enago also strengthened its engagement with the research community through Research & Beyond, its flagship podcast series. Across episodes released during the year, we brought together diverse voices to discuss topics such as academic integrity, evolving expectations in scholarly communication, responsible AI use, peer review practices, and researcher development. With guests like Randy Townsend, Dr. Dave Flanagan, Kristen Ratan, and Dr. Fiona Hutton, among others, each interaction created space for nuanced dialogue around issues that the industry is actively facing, but that are not always captured in formal guidelines.

Looking Ahead

By the end of 2025, the contours of a more settled and pragmatic publishing landscape had begun to emerge. What stands out in retrospect is not a single defining event, but the cumulative effect of many measured decisions. Policies are more refined, infrastructures are set to adapt, and communities have invested time in education and capacity building. These incremental steps, taken together, reflect an industry learning how to govern change rather than simply respond to it.

As scholarly publishing moves into 2026, the challenges ahead are well understood: translating shared definitions into interoperable workflows, embedding transparency into systems rather than statements, and extending training beyond authors to editors, reviewers, and institutional leaders. Yet the progress of 2025 suggests a growing confidence in addressing these challenges collectively.

If this year demonstrated anything, it is that responsible progress in scholarly publishing is rarely abrupt. It is built through alignment, dialogue, and sustained effort that increasingly define how the community approaches the future of research communication.