How Technical Checks Are Evolving with AI and Why Universities Need Expert Intervention More Than Ever

In the wake of AI-related plagiarism scandals at leading institutions, university publication departments are facing mounting scrutiny. The pressure is on to safeguard ethical integrity while maintaining efficiency and quality in academic publishing. As artificial intelligence (AI) tools become increasingly embedded in scholarly workflows, universities must rethink their approach to pre-submission technical checks. The solution lies not in choosing between automation and human oversight, but in combining both.

AI’s Growing Role in Manuscript Preparation

Over the past two years, there has been a marked shift in how researchers approach manuscript preparation. From drafting introductions and rewriting abstracts to correcting grammar, AI tools are now used at every stage of the academic writing process. Tools such as ChatGPT and Grammarly have become common, even essential, across disciplines.

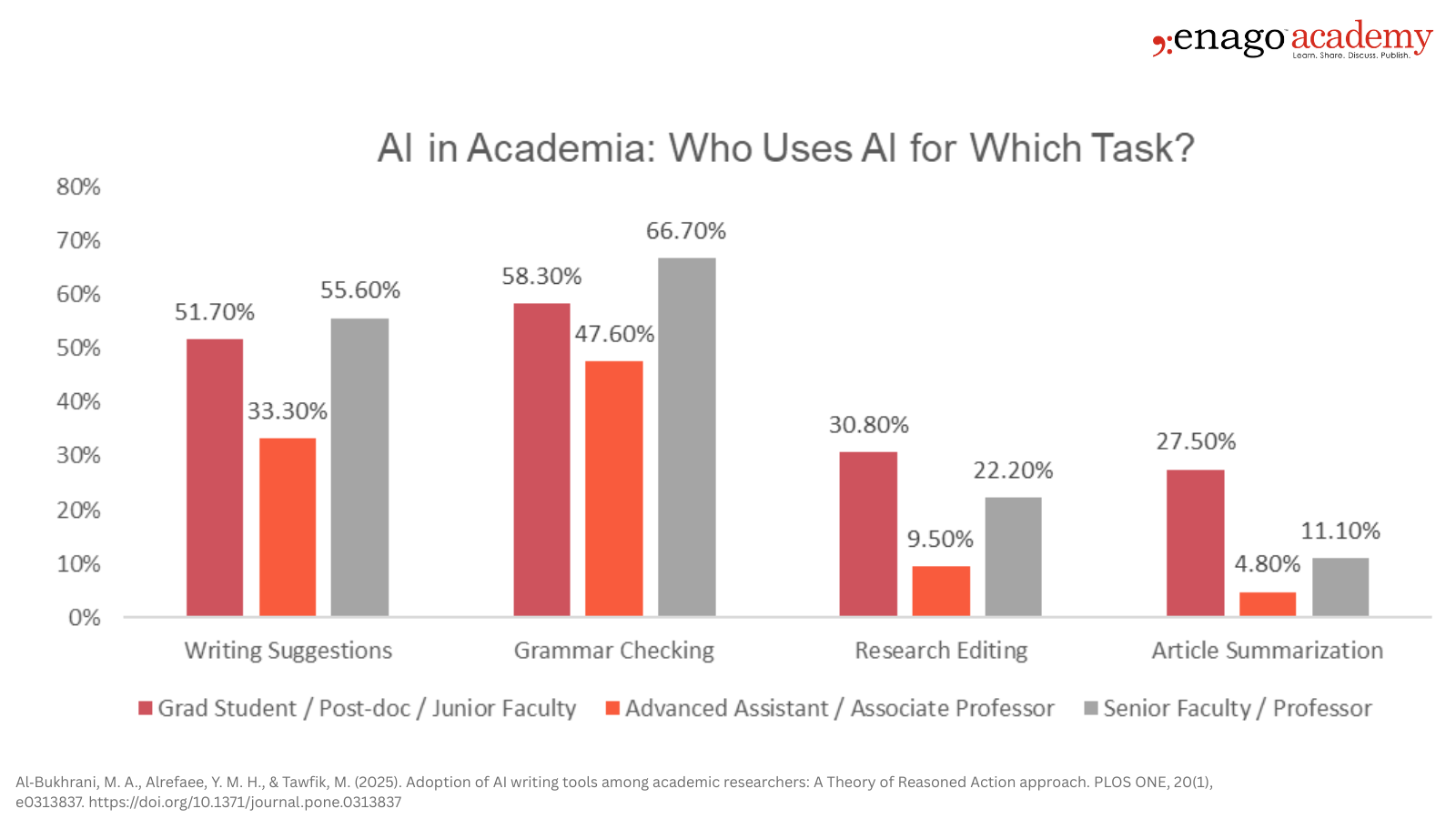

However, the adoption of AI is not uniform. Early-career researchers, such as PhD students and postdoctoral fellows, experiment with AI for a diverse range of tasks, including brainstorming research questions and formatting references. Senior academics, on the other hand, typically engage with AI for more targeted purposes, such as enhancing language clarity or screening for plagiarism before submission.

The Risks of Over-Reliance

Despite its advantages, over-reliance on AI can have serious repercussions. A 2024 Stanford University report shed light on several cases where automated AI detectors falsely flagged international students for plagiarism. These false positives led to academic penalties and significant reputational damage, exposing major flaws in AI-only screening.

In classrooms that increasingly rely on AI detection tools, false accusations of academic dishonesty are becoming alarmingly common. At Johns Hopkins, Professor Taylor Hahn noticed that international students’ work was disproportionately flagged as AI-generated, despite clear evidence of original work. Similarly, a non-native English speaker at Cardozo School of Law had her essay flagged, raising concerns for students. Leigh Burrell from the University of Houston-Downtown proved her innocence with her editing history after her paper was wrongly flagged, highlighting the broader issue of false positives. In response, institutions like the University of Pittsburgh have disabled AI writing indicators, underscoring the urgent need to balance academic integrity with fairness for all students.

Technical check systems powered purely by AI are still unable to interpret nuance: context, author intent, and ethical compliance remain beyond their capabilities. For instance, AI might flag self-citations or standard methods as plagiarism, or fail to differentiate between appropriate image reuse and duplication fraud.

AI can only assess what it has been trained on. It doesn’t understand evolving journal requirements, discipline-specific formatting norms, or subtle ethical breaches. While fast, its output is often generic and misses the layered criteria of peer-reviewed publishing.

Understanding the Stakeholders: Who’s at Risk?

University publication offices, ethics committees, and academic leaders are all under pressure from growing gaps in research quality and publication ethics. Their goals are clear: reduce retractions, minimize desk rejections, and improve acceptance rates in high-impact journals. Yet achieving these outcomes is becoming increasingly difficult.

Limited editorial staff and a surge in manuscript submissions have stretched internal resources thin. At the same time, expectations from global journals continue to rise, leaving institutions struggling to keep pace. While outsourcing editorial support seems like a practical solution, it comes with its own set of concerns—especially around costs, turnaround times, and the security of sensitive data.

In this high-stakes environment, the temptation to rely solely on automation is understandable. But without the right balance of human oversight, such an approach can backfire, compromising the very goals institutions are working to uphold: credibility, compliance, and quality.

Recent insights underscore the double-edged nature of AI in academic environments. Wiley’s 2024 survey of instructors and students found widespread concern that AI may threaten academic integrity: nearly all instructors (96%) believe at least some of their students cheated over the past year, a sharp increase from previous years.

Wiley’s group vice president Lyssa Vanderbeek notes, “While students and instructors have a general belief that AI will be used in ways that are detrimental to academic integrity, they also seem to sense that AI can benefit learning when used the right way.”

This sentiment is echoed across university publication departments, which feel the same tension as they strive to uphold ethical standards while remaining efficient. What remains unaddressed, however, is that most universities still lack formal policies guiding AI use in publication preparation and technical checks, leaving a critical gap in how institutions navigate these evolving technological challenges.

Why Human Expertise Matters

While AI tools have come a long way in streamlining technical checks, human expertise remains irreplaceable. What algorithms can flag, only skilled editors and reviewers can truly interpret in context. Take, for example, the reuse of method sections across multiple papers—something AI might automatically flag as duplication. An experienced reviewer, however, can distinguish between ethical reuse and actual plagiarism.

The same applies to image analysis. Tools may highlight similarities in microscopy or gel images, but only a human can assess whether those similarities are due to legitimate serial experiments or questionable practices. More complex issues—like detecting subtle signs of data fabrication, resolving authorship disputes, or ensuring adherence to evolving publication guidelines—require not just experience, but deep disciplinary insight.

Expert editors also bring an understanding of journal-specific and funder-specific expectations that AI simply can’t replicate. Whether it’s tailoring submissions for medical journals or aligning with the reporting requirements of engineering funders, their role is critical in ensuring that manuscripts are truly submission-ready.

The Hybrid Approach: AI + Editorial Expertise

To meet today’s publishing challenges, institutions should turn to hybrid solutions that combine the speed of AI with the nuance of human judgment. It’s not about choosing between automation and expertise, but about using both in tandem to meet growing demands for quality and integrity. By integrating AI tools for pre-screening and initial checks, institutions can accelerate workflows and reduce the burden on internal teams. But it’s the addition of expert editorial review that ensures flagged issues are accurately interpreted and responsibly addressed. From providing detailed compliance feedback to aligning with institutional policies, this hybrid model delivers both scale and depth.

Such an approach also supports long-term capacity building. Professional partners like Enago can help train in-house staff, develop responsible AI use policies, and foster a culture of quality that extends beyond any single manuscript.

A Trusted Partner for Research Integrity

At Enago, we recognize the complexity of publishing in the age of AI. Our editorial solutions are built to work alongside automation—not replace it—ensuring that institutions get the best of both worlds.

We combine AI-driven plagiarism and image duplication checks with thorough manual verification. Our editors are trained to align reviews with the latest journal and funder standards, offering feedback that is both technically sound and contextually informed. From manuscript clarity to ethical compliance, our reports help researchers at all levels enhance their submissions. Whether you’re managing hundreds of submissions or just looking to reduce retractions, Enago offers a partnership rooted in trust, rigor, and results.

By embedding expert insight into AI-enabled processes, we help universities protect their reputation, uphold academic standards, and support researchers in navigating an increasingly complex publishing landscape.

Contact us today to explore how Enago can support your institution’s publication excellence.