Crediting ChatGPT as a Co-author: Is it ethical, let alone acceptable?

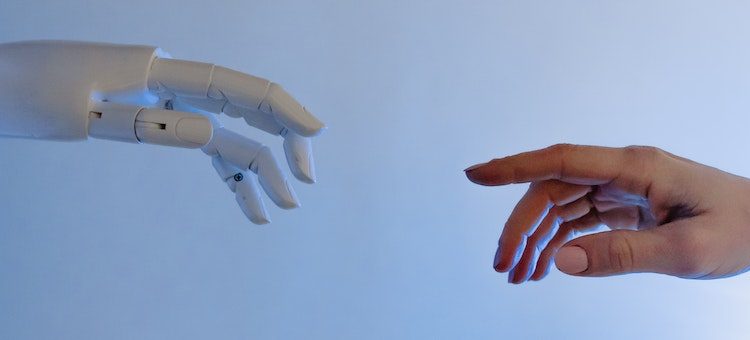

Since its launch, ChatGPT has set the internet abuzz, drawing attention to the increasingly sophisticated capabilities of artificial intelligence (AI) writing tools. AI tools can provide valuable assistance in the research and writing processes; however, a burning question is whether they can replace humans as authors.

While academia was still exploring how AI tools can be employed, Nature reported that ChatGPT had been credited as a co-author on four academic documents, marking its formal debut in the scientific publishing realm. As AI tools such as ChatGPT and other large language models are increasingly employed in research papers, the Committee on Publication Ethics (COPE), along with other organizations such as WAME (World Association of Medical Editors) and the JAMA (Journal of the American Medical Association) Network, emphasizes that AI tools should not be recognized as authors of an article. As scientists object, journal editors, researchers, and publishers are now debating the position of such AI tools in the published literature, and whether it’s appropriate to cite the bot as an author. Publishers are scrambling to develop policies defining the role and responsibilities of such tools.

AI technologies have proven to be extremely beneficial, but they are not without limitations. To create output, AI systems rely on patterns in the data sets they were trained on. This implies they are only as good as that data. If the data used to train the AI tool is biased or flawed, the tool’s output will reflect those biases and flaws. This has major consequences for the accuracy and dependability of study findings.

There is no denying the role of AI in simplifying many tasks, such as answering questions, summarizing text, drafting emails, and even engaging in witty banter. Yet, let’s face it: when it comes to proofreading, editing, and preparing a manuscript for publication, ChatGPT is about as efficient as a fish trying to climb a tree.

Undoubtedly, ChatGPT has access to massive amounts of data and can detect basic writing errors such as spelling and grammar mistakes. However, when it comes to identifying nuanced errors like style inconsistencies, inappropriate wording, or wrong punctuation, it is like a blindfolded kid playing darts. In short, while ChatGPT is a terrific tool for producing content and providing basic input, it’s preferable to leave it to human experts to get your paper publication-ready.

There’s also the difficulty of comprehending context and the author’s intent. ChatGPT may offer revisions that are utterly off-target, leaving the manuscript reading as though it was produced by a robot. Furthermore, it is critical to address the possible impact of over-reliance on AI technologies on the academic community. If AI technologies become widely used in the research and writing processes, the result may be the homogenization of academic output.

Finally, there’s the important question of whether AI tools can ever truly be considered “authors” in their own right. While it’s entirely feasible to use AI tools to generate text, art, and other creative works, it’s unclear whether the output produced by these tools can be regarded as “original”.

Let’s dissect it. When we discuss authorship, we are referring to the obligation and accountability that come with putting your name on a piece of work. An AI tool cannot accept responsibility for the material submitted to a journal for publication. It lacks a conscience, a sense of right and wrong, and a personal stake in the work’s outcome. Therefore, in terms of authorship, it’s completely out of the race.

But it is not only about accepting responsibility for one’s work. Conflicts of interest, as well as copyright and licensing agreements, are key factors in the publication journey. ChatGPT, not being a legal entity, cannot declare the presence or absence of conflicts of interest. Managing copyright and license agreements is likewise out of the question. These are things that require human comprehension, interpretation, and decision-making.

So, what does this mean for authors who use AI tools in writing a manuscript, producing images or graphical elements of the paper, or collecting and analyzing data? Well, it means they have to be transparent and disclose, in the Materials and Methods (or similar section) of the paper, which AI tool was used and how it was used. This is necessary because it allows readers and reviewers to understand the extent of the AI tool’s involvement in the work. Also, as far as the content of a manuscript is concerned, the onus still lies with you as a human author for sections developed with the assistance of an AI tool. You are liable for any breach of publication ethics. It’s your reputation at stake here!

The takeaway from this? For starters, it’s critical to be transparent about how AI tools are used in academic and publishing processes. It’s also crucial to remember that authors are ultimately accountable for the substance of their work, even if AI tools assist them in creating it. Finally, we must be cautious about the consequences of over-reliance on AI technologies. We cannot let technology replace human judgment and decision-making. Authorship ethics are complicated and nuanced. ChatGPT, or any other AI tool, may not be able to meet the standards for authorship in research, but it can certainly help many writers break through writer’s block.