Maintaining the Ethical Boundaries of Generative AI in Research and Scholarly Writing

Generative AI models have transformed the way we use technology for research and scholarly writing. As these systems become increasingly sophisticated in generating human-like text, questions arise about their ethical boundaries, particularly in scientific writing. The responsible use of generative AI in scholarly writing therefore depends on clear guidelines, protocols, and frameworks that ensure compliance with the ethical boundaries set to maintain research integrity.

Integrating generative AI assistance into the scientific writing process should be guided by a commitment to transparency, integrity, and accuracy. Institutions, journals, and researchers must therefore collectively address the intricacies of authorship, citation, and accountability in the age of AI-generated content. This article explores the ethical considerations surrounding the use of generative AI in scientific writing and the importance of striking the right balance to ensure its responsible use.

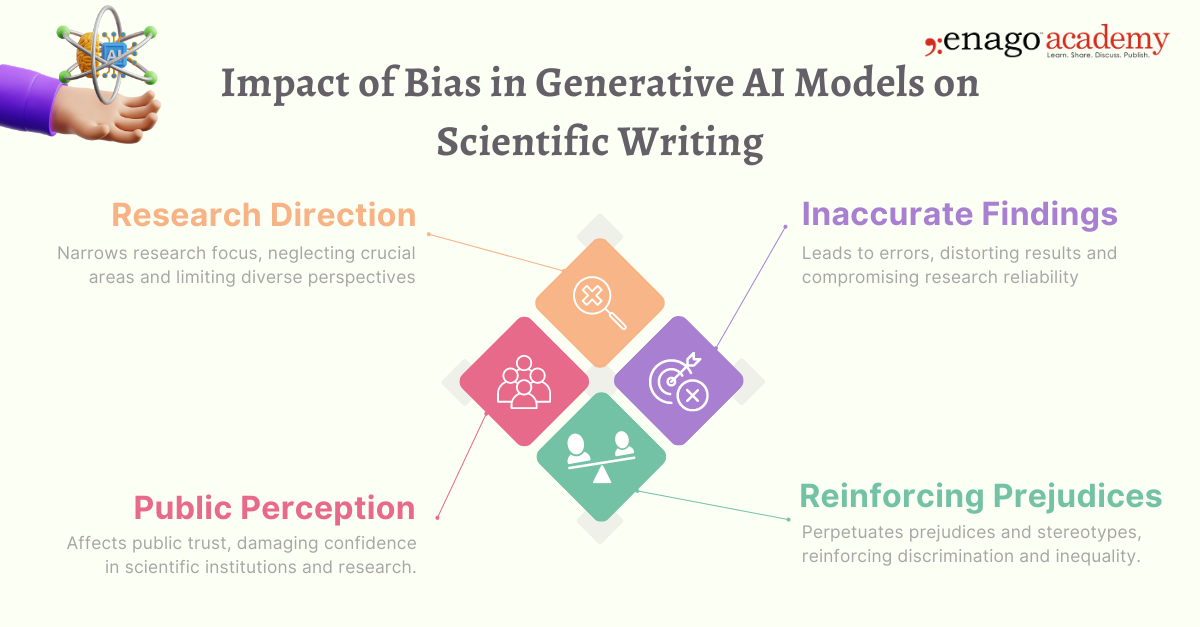

The Impact of Bias in Generative AI Models on Scientific Writing

Bias in generative AI models can have significant implications for scientific writing and research. When these models are trained on biased datasets, the generated content may reflect and perpetuate those biases, leading to skewed conclusions and misleading information. Here are some of the ways bias in generative AI can affect scientific writing:

1. Research Direction

AI-generated content influenced by bias might steer researchers toward certain topics while neglecting other critical areas of research, limiting the exploration of diverse perspectives.

2. Inaccurate Findings

Bias in AI-generated content may introduce inaccuracies and misinterpretations, potentially leading to flawed scientific findings and unreliable research outcomes.

3. Reinforcing Prejudices

AI-generated content that reflects societal biases can reinforce existing prejudices and stereotypes, contributing to systemic discrimination and inequality in research.

4. Public Perception

If AI-generated content is biased, public perception of scientific research could suffer, eroding trust in scientific institutions and their findings.

How to Ensure Fair Use of Generative AI Tools

Generative AI tools have transformed content generation, giving writers and researchers efficient ways to draft and refine text. However, with great power comes great responsibility. As users of these advanced tools, we must make fairness in their deployment a priority.

Here are practical strategies writers and researchers can adopt to promote fair use of generative AI:

1. Continuous Monitoring for Bias

Regularly assess AI outputs for potential bias. Bias detection tools or manual checks help identify and correct biased content promptly.

2. Fact-checking and Validation

Cross-reference AI-generated content with reliable sources to validate its accuracy and authenticity. Fact-checking remains a crucial step in ensuring the credibility of your work, and researchers should critically evaluate the content before including it in scientific publications to catch any potential biases or inaccuracies.

3. Human Oversight and Editing

Review and edit AI-generated content to ensure accuracy, relevance, and coherence. Human intervention adds a layer of quality control that AI might lack.

4. Transparency in Attribution

Clearly indicate which sections of your content were AI-generated and which reflect your own contributions. This preserves the originality of your work and lets readers differentiate between human and AI input.

5. Contextual Understanding

Generative AI may lack a nuanced understanding of context, so as a writer, provide contextual cues, explanations, or interpretations to ensure that AI-generated content aligns with your intended message.

6. Stay Informed

Stay updated on the latest developments in AI ethics and responsible use. Engage in discussions, attend workshops, and stay connected with the broader AI community.

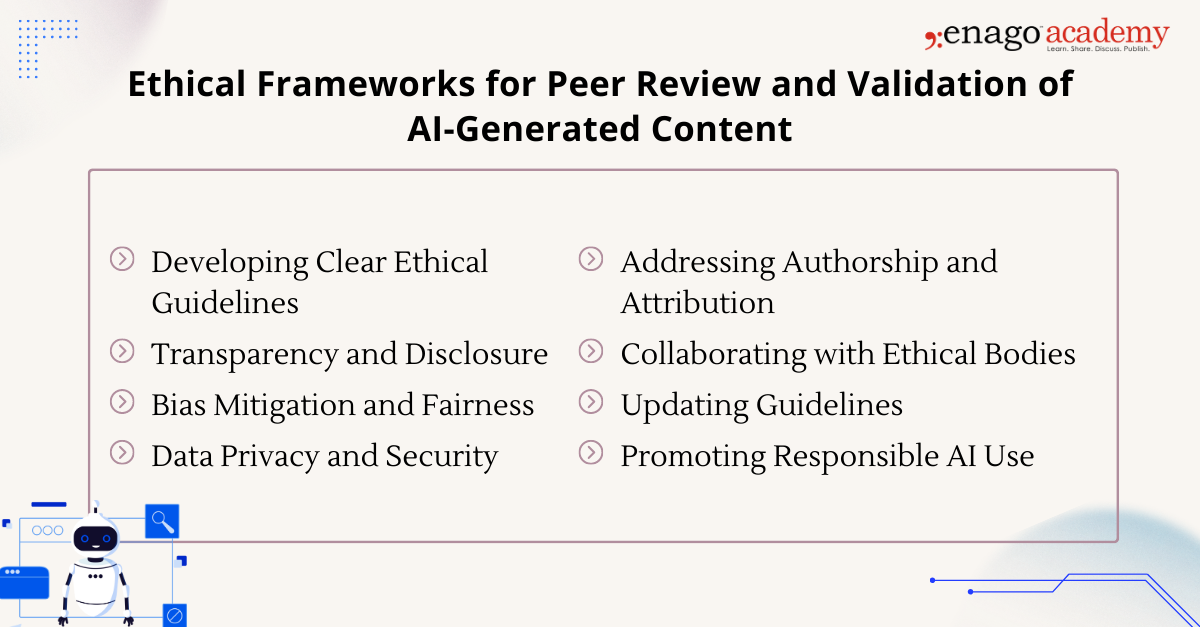

Establishing Ethical Frameworks for Peer Review and Validation of AI-generated Content

Publishers, journals, and institutions play a pivotal role in the peer review and validation of AI-generated content, ensuring the responsible and ethical use of AI in scientific research and writing. As AI takes on a growing role in content generation, upholding rigorous standards and ethical considerations becomes crucial. Here is how these entities contribute to establishing ethical frameworks:

1. Developing Clear Ethical Guidelines

Publishers, journals, and institutions are responsible for developing clear and comprehensive ethical guidelines that cover various aspects of research and publication. These guidelines address issues such as bias, privacy, data security, authorship, transparency, and the responsible use of AI models.

2. Transparency and Disclosure

Ethical frameworks emphasize the importance of transparently disclosing the use of AI in research. Authors are required to provide details about the AI models used, their training data, and the potential limitations of AI-generated content.

3. Bias Mitigation and Fairness

Ethical frameworks stress the need to address biases in AI-generated content, and publishers, journals, and institutions implement strategies to minimize biased outputs and ensure fairness in research outcomes.

4. Data Privacy and Security

Institutions and publishers ensure that AI-generated content adheres to data privacy regulations, protecting sensitive information used in AI models.

5. Addressing Authorship and Attribution

Guidelines define how AI-generated contributions should be credited while acknowledging the roles of human authors in the research.

6. Collaborating with Ethical Bodies

Publishers and institutions may collaborate with organizations like the Committee on Publication Ethics (COPE) and the World Association of Medical Editors (WAME) to align with established ethical guidelines and participate in ethical discussions.

7. Updating Guidelines

As technology and research practices evolve, publishers and institutions regularly review and update ethical guidelines to address emerging challenges, such as those posed by AI-generated content.

8. Promoting Responsible AI Use

With the growing use of AI in research and publication, publishers and institutions encourage responsible AI practices, including transparency in AI use and addressing biases in AI-generated content.

Key Takeaway

The emergence of generative AI has transformed scientific content generation, offering great potential for accelerating research and expanding knowledge. However, it also introduces ethical concerns that must be carefully addressed to preserve the integrity and credibility of scientific writing. Bias, privacy and data security, and the challenge of maintaining transparency and accountability are critical considerations in using AI to generate scientific content.

To navigate these ethical challenges, researchers, publishers, journals, and institutions must collaborate to establish robust ethical frameworks and guidelines. Rigorous peer review and validation processes for AI-generated content are paramount in ensuring accuracy, reliability, and responsible use. Ultimately, striking the right balance between technological advancement and ethical consideration will be essential to ensure that AI remains a valuable ally in the scientific writing process while preserving the essence of scientific integrity.

As a researcher, how do you envision leveraging generative AI tools in your work responsibly? What ethical considerations would you prioritize to ensure the credibility and integrity of your research outputs? Share your thoughts and ideas with fellow researchers on the Enago Academy Open Platform, and let’s shape a future where AI and human ingenuity work hand in hand for transformative academic writing!

Frequently Asked Questions

What are the ethical concerns in AI?

Ethical concerns in AI include bias and fairness, privacy and data security, accountability and transparency, autonomy in decision-making, and intellectual property and copyright. Addressing these concerns helps ensure responsible AI development and deployment.

What are the main risks of generative AI?

The main risks of generative AI include bias amplification, misinformation, intellectual property issues, data privacy concerns, unintended content, loss of creativity, job displacement, and ethical dilemmas. Mitigating these risks requires careful development, thorough testing, transparent practices, and ongoing ethical consideration in the use of generative AI.

Why is AI ethics important?

AI ethics is crucial because it ensures that artificial intelligence technologies are developed, deployed, and used in ways that align with human values, fairness, transparency, accountability, and societal well-being. Without AI ethics, there is a risk of biased outcomes, privacy violations, discrimination, and unintended negative consequences.