Call for Responsible Use of Generative AI in Academia: The battle between rising popularity and persistent concerns

Generative AI is a fascinating and complex landscape, but it is not without inherent risks and challenges. Chief among the concerns are bias, user competency, transparency, and predictability. This calls for responsible and ethical use of the technology, while acknowledging the positive impact that generative AI can have on advancing knowledge and driving innovation in academia.

The Impact Generative AI Tools Can Have on Advancing Knowledge and Driving Innovation

Generative AI tools have the potential to identify patterns and generate insights that would otherwise be time-consuming for human researchers to uncover.

They can assist in data analysis, article summarization, and even contribute to the development of new theories and hypotheses. By leveraging AI, researchers can accelerate the pace of discovery and make significant contributions to their respective disciplines.

However, alongside the benefits, it is crucial to emphasize the threats that arise from the integration of generative AI without human intervention.

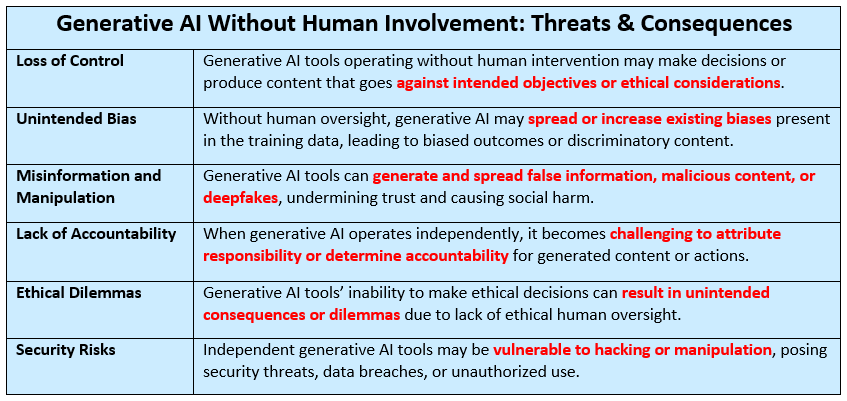

The Threats of Generative AI Integration Without Human Intervention

Deploying generative AI without proper human intervention can create challenges and threats that undermine ethical standards and harm societal well-being.

The transformative potential of generative AI tools in academia, when coupled with responsible human practices, can drive innovation and advance knowledge in extraordinary ways.

By establishing guidelines, ensuring diversity and inclusivity in data, and promoting human-AI collaboration, academia can harness the full potential of AI while minimizing its risks. Responsible use of generative AI will empower researchers and educators to make ground-breaking discoveries and contribute positively to the advancement of knowledge.

Ethical Considerations in Integrating Generative AI into Academic Research and Writing

Ethical considerations surrounding data usage, intellectual property, and potential job displacement are of great importance when integrating AI into academic research and writing. Addressing these concerns is crucial for ensuring responsible and ethical use of AI tools in academia.

1- Data Usage

- Ethical practices demand diverse, inclusive, and unbiased data collection.

- Biased or incomplete data can lead to discriminatory outcomes and reinforce inequalities.

- Avoiding biased datasets is crucial to ensuring fair and representative AI-generated insights.

2- Intellectual Property

- Proper attribution and respect for intellectual property rights are vital.

- AI-generated content raises concerns of plagiarism and copyright infringement.

- Clear guidelines and protocols are needed to navigate the intersection of AI-generated content and intellectual property, promoting ethical practices.

3- Potential Job Displacement

- Automation of tasks through AI tools may lead to job losses in academia.

- Reskilling and upskilling efforts are necessary to work effectively alongside AI tools.

- Ethical considerations call for investment in education and training programs to empower individuals and minimize the negative impact of job displacement.

4- Social and Economic Impact

- Beyond the immediate concern of job displacement, it is crucial to address the wider societal implications of the increasing automation of tasks through AI. Proactive measures to mitigate the potential negative consequences are essential.

- Policies and frameworks should support affected individuals and communities.

- A just and equitable transition requires proactive measures to minimize negative consequences and promote inclusivity.

By acknowledging and addressing these ethical considerations, academia can ensure the responsible and ethical use of generative AI tools in research and writing, fostering fairness, inclusivity, and the advancement of knowledge.

Risks of Overreliance on Generative AI and the Potential Impact on Critical Thinking and Creativity

Overreliance on AI tools in academic research and writing poses risks that can impact critical thinking and creativity. While AI tools offer efficiency and automation, they are not a substitute for human intelligence and intuition, and their limitations can hinder the development of innovative ideas and intellectual growth.

Overreliance on generative AI tools can therefore severely affect researchers’ critical thinking and creativity. To maintain a well-rounded and innovative approach, researchers must engage actively with AI-generated insights, continue to foster their own creativity, and remain cognizant of the limitations and biases inherent in these tools.

Responsible use of generative AI tools such as ChatGPT, DeepL Write, and Bard in academia holds the promise of taking research and writing to new heights. It enables researchers to make ground-breaking contributions to their respective fields and push the boundaries of human knowledge.

If you’ve had experience with generative AI tools in your academic work, we invite you to share your insights with the academic community by commenting below or contributing an opinion piece on the Enago Academy Open Platform, which connects you with 1000K+ researchers and academicians worldwide.