Skewed Citations Affecting Journal Impact Factor – Need for tailoring journal evaluation metrics across disciplines

The impact factor has long been the gold standard metric for evaluating the relative importance and influence of academic journals. It is calculated by taking the citations a journal receives in a given year to items it published in the previous two years, and dividing that by the number of citable items it published in those two years. The result is a simple numerical score that has shaped institutional subscriptions, researchers’ choices of where to publish, and even hiring and promotion decisions. However, this seemingly straightforward calculation masks a significant distortion that calls into question how accurately the impact factor represents a journal’s impact: the prevalence of skewed citation distributions, which vary widely across disciplines.
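As a rough illustration of the arithmetic, the sketch below computes a two-year impact factor for a hypothetical journal. The function name and all figures are invented for illustration. Clarivate’s actual calculation also involves editorial decisions about which items count as "citable".

```python
def two_year_impact_factor(citations_in_year, citable_items_prev_two_years):
    """Impact factor for year Y (illustrative sketch, hypothetical helper).

    citations_in_year: citations received in year Y by items the journal
        published in years Y-1 and Y-2.
    citable_items_prev_two_years: citable items (typically research articles
        and reviews) the journal published in years Y-1 and Y-2.
    """
    if citable_items_prev_two_years <= 0:
        raise ValueError("the journal must have at least one citable item")
    return citations_in_year / citable_items_prev_two_years


# Hypothetical journal: 120 citable items published in 2021-2022,
# cited 480 times during 2023.
jif_2023 = two_year_impact_factor(480, 120)
print(f"2023 impact factor: {jif_2023:.1f}")  # 4.0
```

Because the score is a plain average, it inherits every weakness of the mean when citations are unevenly spread across a journal’s papers.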

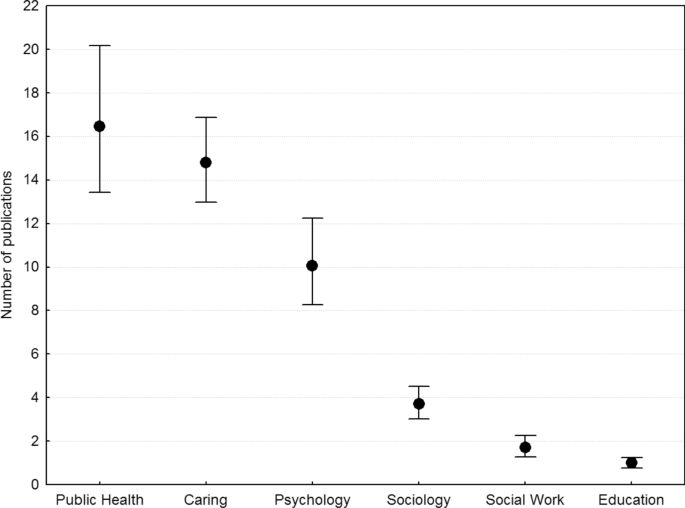

According to a paper published by Springer Nature, public health is the discipline that receives the highest number of citations.

Source: Springer Nature

From the core sciences performing cutting-edge experiments to the humanities engaged in nuanced philosophical discourse, each field has its own norms, practices, and culture that shape the research process and the dissemination of findings.

Yet despite this diversity, the traditional journal impact factor (JIF) evaluates all academic journals through the same narrow lens. While perhaps a reasonable shorthand for disciplines like molecular biology, where citations accumulate quickly, this approach falls short for many other fields of study. Scholarly work in the humanities, for example, can take years to be cited as ideas percolate gradually. Additionally, in theoretical mathematics or philosophy, the mere advancement of a new theoretical framework can represent ground-breaking impact, regardless of how many citations it collects in the short term.
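To make the timing problem concrete, the sketch below asks what fraction of a paper’s citations arrive in its first two years after publication, roughly the period a two-year impact factor window can see. The yearly citation curves are hypothetical, chosen only to contrast a fast-citing field with a slow-citing one, and the function name is likewise illustrative.

```python
def share_within_window(citations_by_year, window=2):
    """Fraction of a paper's total citations arriving in its first `window` years.

    citations_by_year: citations received in year 1, year 2, ... after publication.
    """
    total = sum(citations_by_year)
    if total == 0:
        return 0.0
    return sum(citations_by_year[:window]) / total


# Hypothetical citations per year over ten years for a typical paper.
fast_field = [5, 8, 6, 4, 3, 2, 1, 1, 0, 0]  # e.g. a fast-moving lab science
slow_field = [0, 1, 1, 2, 2, 3, 3, 3, 3, 2]  # e.g. a humanities discipline

for name, curve in [("fast field", fast_field), ("slow field", slow_field)]:
    print(f"{name}: {share_within_window(curve):.0%} of citations fall inside the window")
```

With these made-up curves, the window captures about 43% of the fast-field paper’s citations but only 5% of the slow-field paper’s, even though both papers go on to be cited steadily.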

Addressing the Skewed Distribution of Citation Patterns

Ideally, the citation counts for papers published in a journal would follow a roughly normal distribution. A few standout papers might receive an unusually high number of citations, but the majority would cluster around the mean, with similar numbers slightly above and below that average. When this holds true, the impact factor acts as a reasonable proxy for the overall influence and readership of a journal.

But what happens when citation distributions are heavily skewed? When a small handful of papers receive a disproportionately large number of citations compared to the rest of the journal’s output? In this case, those few outlier papers have the ability to single-handedly inflate a journal’s impact factor far beyond what is representative of the typical readership and impact of papers published in that journal.
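The effect is easy to reproduce with a few made-up numbers. The sketch below compares two hypothetical journals whose ten papers collect the same total of 50 citations. Their averages, the kind of figure an impact-factor-style score reports, are identical, yet the typical paper in the skewed journal is cited far less often. The journals, counts, and the `summarize` helper are all illustrative.

```python
from statistics import median


def summarize(citations):
    """Return (mean, median, share of citations held by the two most-cited papers)."""
    mean = sum(citations) / len(citations)  # the kind of average an impact factor reports
    top_two = sum(sorted(citations)[-2:])
    return mean, median(citations), top_two / sum(citations)


# Hypothetical citation counts for ten papers in each journal.
journal_a = [3, 4, 4, 5, 5, 5, 6, 6, 7, 5]    # counts cluster near the average
journal_b = [0, 0, 1, 1, 1, 2, 2, 3, 10, 30]  # two papers dominate

for name, cites in [("A", journal_a), ("B", journal_b)]:
    mean, med, share = summarize(cites)
    print(f"Journal {name}: mean={mean:.1f}, median={med:.1f}, top-two share={share:.0%}")
```

Both journals report a mean of 5.0, but journal B’s median paper has only 1.5 citations and its two most-cited papers account for 80% of all its citations (versus 26% for journal A).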

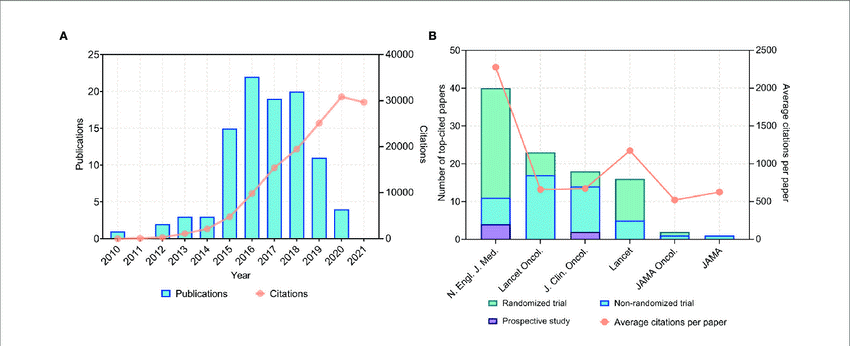

Source: ResearchGate

One of the fundamental challenges in journal evaluation lies in the diverse citation patterns exhibited across academic disciplines. The arts and humanities, with their profound emphasis on deep intellectual exploration, often witness lower and slower citation rates compared to the rapid and dynamic nature of the sciences and social sciences. Understanding and acknowledging these variations is crucial for developing evaluation metrics that capture the essence of scholarly impact within each field.

As the scholarly world becomes more inclusive, persisting with a one-size-fits-all approach not only disrespects disciplinary distinctions — it actively harms certain branches of knowledge by undervaluing their work.

Hence, it is imperative to design and implement journal metrics that embrace disciplinary diversity. Several alternatives already refine how citations are counted: the Eigenfactor™ Score weights each citation by the influence of the citing journal, and CiteScore™ uses a longer citation window than the JIF. The Source Normalized Impact per Paper (SNIP) goes further by correcting for differences in citation practices between fields.

Adding to this, recent changes to Clarivate’s Journal Citation Reports (JCR) show a move toward acknowledging and addressing these challenges. The inclusion of profile pages for arts and humanities journals, the introduction of the Journal Citation Indicator (JCI) for cross-disciplinary comparisons, and the extension of the JIF to a broader range of quality journals reflect a more nuanced understanding of scholarly communication.
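Field normalization, the idea behind cross-disciplinary indicators such as the JCI, can be sketched in a few lines: divide each paper’s citations by the citations expected for papers in its field, then average the ratios, so that 1.0 means "field average". This is a simplified, hypothetical version, not Clarivate’s method: the real JCI also normalizes by document type and publication year and uses Clarivate’s own category baselines. The function name, citation counts, and baselines below are invented.

```python
def normalized_citation_impact(paper_citations, field_expected_citations):
    """Mean ratio of each paper's citations to its field's expected citations.

    A value of 1.0 means the journal's papers are cited at the field average.
    Simplified sketch: one baseline per field, no adjustment for document
    type or publication year.
    """
    if field_expected_citations <= 0:
        raise ValueError("the field baseline must be positive")
    if not paper_citations:
        raise ValueError("need at least one paper")
    ratios = [c / field_expected_citations for c in paper_citations]
    return sum(ratios) / len(ratios)


# Hypothetical journals and field baselines (expected citations per paper).
humanities_journal = [2, 3, 1, 4]   # field baseline: 2.0
biology_journal = [20, 30, 10, 40]  # field baseline: 25.0

for name, cites, baseline in [
    ("humanities", humanities_journal, 2.0),
    ("molecular biology", biology_journal, 25.0),
]:
    raw = sum(cites) / len(cites)
    norm = normalized_citation_impact(cites, baseline)
    print(f"{name}: raw mean={raw:.1f}, field-normalized={norm:.2f}")
```

On raw averages the biology journal looks ten times stronger (25.0 versus 2.5). After normalization, the humanities journal sits above its field’s average (1.25) while the biology journal sits at its field’s average (1.00).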

By moving beyond a simplistic, citation-only model, a new era of journal metrics can finally acknowledge and appreciate the full diversity of academia, enabling nuanced evaluations that respect the unique impact pathways of each branch of knowledge.

What We Recommend: A unified vision for diversity in deciding journal impact

The complexities of journal evaluation across disciplines call for a move away from rigid metrics. By acknowledging the varied citation patterns, speeds, and scholarly nuances inherent to each discipline, and by tailoring evaluation approaches accordingly, we can foster a more inclusive and accurate representation of academic excellence.

The future of inclusive scholarly publishing demands ongoing collaboration and dialogue, recognizing that the best solutions arise from a collective understanding of the unique challenges and opportunities that each field presents.

Ultimately, whether we advocate for new metrics or a more nuanced interpretation of the classic impact factor, the key is recognizing that skewed distributions can distort and misrepresent a journal’s influence. A more complete, contextualized view of journal performance is needed, one that measures and evaluates the influence of academic research accurately.

To defend the diverse spectrum of knowledge, we must embrace new journal metrics that reflect how impact manifests across disciplines. The path forward requires nothing less.

So the questions we should be asking ourselves are:

- How do we construct a system that honors the unique rhythms and contributions of each academic discipline without succumbing to the pitfalls of oversimplification?

- In what ways can emerging metrics capture the multifaceted nature of impact, beyond citations and immediate academic recognition?

- And perhaps most crucially, how do we ensure that this evolution towards a more nuanced evaluation system fosters equity, encouraging voices from all corners of the academic spectrum to be heard and valued?
