Redefining Academic Publishing: Discussing a New Metric for Time from First Submission to Acceptance

Publishing has long been a necessary component of academic research, enabling scholars to share their findings and push the boundaries of human knowledge. Yet even after a manuscript has been drafted, this vital process of research dissemination is rarely a smooth journey. Many scholars point to the length of the journal peer review process as a major barrier to speedy publication. Traditional journal metrics such as average time to first decision and average time from acceptance to publication have been used to gauge publishing efficiency, but they often fall short of capturing the true timeline from manuscript submission to acceptance.

The current publishing system has worked fairly well for centuries, but as publication volumes grow exponentially, authors face prolonged submission-to-acceptance timelines. Several factors contribute, including limited reviewer availability and the extent of the revisions reviewers request. At times, administrative bottlenecks caused by rising demand have also delayed the start of peer review. Frustrating as they are, these factors are well known and have been studied closely over the last decade.

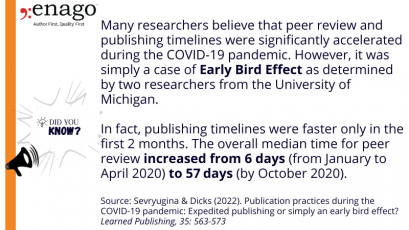

For example, Huisman & Smits (2017) carried out a detailed review of publishing timelines in their Scientometrics paper, highlighting the need to manage both editorial and peer review timelines and to set the right author expectations for the process.

So how can publishers meet or even exceed author expectations?

Reports indicate that medicine typically has the shortest timelines, followed by the natural sciences, with economics and the social sciences taking longest. Interestingly, medicine and the natural sciences also have more submissions and more journals than the humanities and social sciences. Yet despite publishers’ access to large datasets, current journal metrics do not give authors the data they need to judge how quickly they are likely to be published. The journal metrics adopted by most publishers focus on two aspects that are within the publisher’s control:

- Average Time to First Decision: This journal metric measures the average time an editorial desk check takes to reach an initial decision (e.g., reject or send to peer review).

- Average Time from Acceptance to Publication: This metric measures the average duration of a journal’s production process.

While these journal metrics offer valuable insights into publishing efficiency, they have notable limitations:

- Average Time to First Decision may not account for delays caused by manuscripts being returned to authors for corrections (such as adding missing documents or information). No major publisher publishes these statistics.

- In the last two years, more journals have been unable to find peer reviewers. This likely lengthens the timelines of outlier manuscripts significantly, and that is another statistic journals do not publish.

- Last, and most importantly, neither metric captures the time spent in review or the actual time from a manuscript’s first submission to its acceptance. Even that figure presumes the manuscript is accepted by the first journal to which it is submitted.
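Outliers are easy to lose inside an average. As a minimal sketch of how a journal could surface them, the Python below summarizes per-manuscript durations with the median and 90th percentile alongside the mean. The `summarize` function, the choice of the 90th percentile, and the sample durations are hypothetical illustrations, not a statistic any publisher currently reports.

```python
import statistics
from typing import Sequence


def summarize(days: Sequence[int]) -> dict[str, float]:
    """Summarize per-manuscript durations (in days) for one journal.

    Hypothetical helper: reports the median and 90th percentile next to the
    mean, so a few very slow manuscripts cannot hide behind one average.
    """
    if len(days) < 2:
        raise ValueError("need at least two manuscripts to compute percentiles")
    # n=10 yields 9 cut points (deciles); the last one is the 90th percentile.
    deciles = statistics.quantiles(days, n=10, method="inclusive")
    return {
        "n": len(days),
        "mean": statistics.mean(days),
        "median": statistics.median(days),
        "p90": deciles[-1],
    }


# Made-up durations: nine manuscripts move steadily, one waits months for reviewers.
sample = [90, 100, 110, 120, 130, 140, 150, 160, 170, 400]
summary = summarize(sample)
print(summary["mean"], summary["median"], summary["p90"])
```

In this made-up sample, the single slow manuscript pulls the mean well above the median, and only the 90th percentile makes the long tail visible.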

Recognizing these limitations, Elsevier has begun to adopt a more nuanced and accurate measure, Time to Peer Review. Unsurprisingly, however, this metric is not yet available for all journals. Moreover, Elsevier’s Journal Finder service publishes the two traditional journal metrics but not Peer Review time, even where it is available.

Meanwhile, both the data available to publishers and the field of data science have evolved remarkably. Publishing the Time to Peer Review metric industry-wide could help authors make better decisions when selecting a journal. It could also set the right expectations about likely publication timelines, improving the overall author experience.

Publishers Should Define the Concept of Time from First Submission to Acceptance

Over the last decade, the academic publishing industry has undergone a noticeable shift in how it views peer review. Third-party stakeholders such as Science Colab, Peer Community In, and PREreview are entering the industry with products designed to make peer review more timely. From the growing number of preprints to emerging models of peer review (collaborative or community peer review), there is heightened interest in improving the author experience in publishing. However, the effectiveness of these new technologies and collaborative efforts will be hard to assess unless we adopt a journal metric that clearly indicates the actual time it takes for a paper to be published.

A new metric, Time from First Submission to Acceptance, will account not only for the journal’s review time but also for author revisions, editorial decisions, and any administrative delays along the way. By incorporating these components, it will more accurately reflect the complexity of the publication process, covering the entire timeline from the moment an author first submits a manuscript to the moment it is accepted.

Moreover, this metric offers distinct benefits to everyone in the publishing cycle: authors get a more transparent view of the publication timeline, while reviewers and editors get a clearer understanding of their roles in managing it. By analyzing the components of this metric, journals and publishers can streamline their operations, improve the author experience, and optimize resource allocation. Publishing it has the potential to enhance collaboration, accelerate scientific discovery, and positively transform how scholarly knowledge is disseminated.
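To make the difference between a traditional metric and the proposed one concrete, here is a minimal Python sketch that computes both for a single manuscript. It assumes a hypothetical per-manuscript event log; the `Event` type, the helper functions, and the event labels (`"submitted"`, `"first_decision"`, `"revision_requested"`, `"resubmitted"`, `"accepted"`) are illustrative and do not reflect any publisher’s actual schema.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Event:
    """Hypothetical record: one row per status change in a manuscript's history."""
    day: date
    kind: str  # e.g. "submitted", "first_decision", "revision_requested", "resubmitted", "accepted"


def first(events: list[Event], kind: str) -> date:
    """Date of the earliest event of the given kind (raises ValueError if absent)."""
    return min(e.day for e in events if e.kind == kind)


def time_to_first_decision(events: list[Event]) -> int:
    """Traditional metric: days from submission to the initial editorial decision."""
    return (first(events, "first_decision") - first(events, "submitted")).days


def time_first_submission_to_acceptance(events: list[Event]) -> int:
    """Proposed metric: days from first submission to acceptance, including
    review rounds, author revisions, and administrative delays."""
    return (first(events, "accepted") - first(events, "submitted")).days


def days_with_author(events: list[Event]) -> int:
    """One component of the proposed metric: days between each revision
    request and the author's next resubmission."""
    total, pending = 0, None
    for e in sorted(events, key=lambda e: e.day):
        if e.kind == "revision_requested":
            pending = e.day
        elif e.kind == "resubmitted" and pending is not None:
            total += (e.day - pending).days
            pending = None
    return total


# Made-up history for one manuscript with a single revision round.
history = [
    Event(date(2023, 1, 10), "submitted"),
    Event(date(2023, 1, 24), "first_decision"),  # sent to peer review
    Event(date(2023, 3, 20), "revision_requested"),
    Event(date(2023, 4, 30), "resubmitted"),
    Event(date(2023, 6, 15), "accepted"),
]
print(time_to_first_decision(history),
      time_first_submission_to_acceptance(history),
      days_with_author(history))
```

In this made-up history, the time to first decision is 14 days, while the time from first submission to acceptance is 156 days, 41 of which the manuscript spent with the author. Splitting out components like the author’s share is one way journals could analyze where the time actually goes.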

Call for Industry-wide Implementation

Manual data collection has been a hindrance in the past, but technology has evolved considerably, and access to data and analytics is no longer a major barrier. Still, while the advantages are clear, other challenges could hinder implementation of this measurement. Here are some viewpoints:

1. Enhancing Journal Selection:

The availability of Time from First Submission to Acceptance metrics can aid researchers in choosing journals that align with their publication timeline preferences, leading to more strategic and efficient manuscript submissions.

2. Transparent Data Sharing:

Journals and publishers should share anonymized data proactively. This practice can promote transparency and foster healthy competition among publishers, ultimately benefiting authors and the research community.

3. Balancing Competition and Collaboration:

An industry-wide commitment to publishing this metric is crucial, even if it reduces the appeal of some journals in a publisher’s portfolio. This is especially true in medicine and the natural sciences, where several journals may serve the same niche subject, so publishers must adopt a unified approach. Strong competition can exist among journals, but collaborative efforts to establish industry standards for this metric can strike a balance between healthy competition and the collective goal of improving the publishing process.

4. Privacy and Ethics:

When collecting data, issues related to author and reviewer privacy must be addressed. Anonymizing data and obtaining informed consent are important ethical considerations.

5. Standardization of Journal Metrics:

Establishing standardized definitions and methodologies for measuring this metric is essential to ensure consistency across journals and publishers.

6. Aligning with Open Science Principles:

The adoption of this metric aligns with the principles of open science by promoting transparency, collaboration, and data sharing, ultimately advancing the progress of scientific knowledge.

7. Promoting Reader Confidence:

Standardization not only ensures consistency but also promotes reader confidence in the accuracy and reliability of the Time from First Submission to Acceptance metric, which is essential for informed decision-making.

8. Balancing Portfolio Appeal:

While adopting this metric may reduce the appeal of certain journals, it can also incentivize journals to optimize their processes, ultimately benefiting authors and the research community. Third-party products could speed up this optimization significantly.

In our opinion, measuring Time from First Submission to Acceptance represents a marked paradigm shift toward evaluating and improving publishing efficiency transparently. Traditional metrics have their place, but this metric offers a more comprehensive and accurate assessment of the entire publishing timeline. By embracing it, the publishing community can facilitate collaboration, accelerate timelines, and serve the research community more effectively and efficiently. Let’s keep discussing this topic until we find a solution; doing so can only benefit all of us.