AI Integration Can Future-Proof Peer Review, But Only If We Define and Drive the Right Principles

In the ever-evolving landscape of scientific research, trust in the integrity, validity, and impartiality of how scientific findings are disseminated is essential for human progress. Scholars, researchers, institutions, and consumers of research have long relied on peer review as the gold standard for ensuring the quality and credibility of published research. Five core elements are commonly identified as constitutive of scholarly peer review: fairness in the critical analysis of manuscripts; the selection of appropriate reviewers with relevant expertise; identifiable, publicly accountable reviewers; timely reviews; and helpful critical commentary.

However, the traditional peer review process is not without its challenges. Among other issues, it has been criticized for potential biases, a lack of transparency, and the time it takes to publish research. Because it is an entirely human process, it is also difficult to standardize and to make highly efficient.

In this article, I want to address one specific question: can AI help resolve these issues without compromising the gold standard?

Let’s First Take a Quick Peek into the Evolution of the Peer Review Process

Peer review dates back to the 17th century, when the Royal Society of London began using it to evaluate scientific manuscripts. Over the centuries, it has become the heart of scholarly publishing: a process through which experts in a field review and assess research papers before publication. Its purpose is to ensure the quality and validity of research, identify errors or methodological flaws, and provide constructive feedback to authors, thereby upholding “trust in science.” Traditionally, this process has been carried out by human peers, but the advent of AI has opened new avenues for enhancing, and potentially transforming, peer review.

The Promise of AI Tools in Peer Review

So far, we have come across several AI tools that seem promising for improving peer review:

1. Reviewer Matching

AI algorithms can streamline the reviewer selection process by matching manuscripts with suitable experts quickly, ensuring that research is evaluated by individuals with relevant expertise.

2. Efficiency and Speed

AI algorithms can now swiftly analyze and assess research manuscripts based on pre-defined characteristics, significantly reducing the time it takes for papers to be reviewed and published.

3. Objectivity and Bias Mitigation

Although AI models are themselves trained on data that may be biased, AI has the potential to mitigate human biases that can creep into the peer review process, such as those related to geography, gender, race, or institutional affiliation.

4. Transparency and Accountability

AI-powered peer review can provide transparent, data-driven evaluations of research manuscripts. Review reports generated by AI algorithms can be made accessible to reviewers and authors, enhancing transparency and accountability in the review process.

5. Identification of Misconduct

AI tools can be programmed to detect anomalies and potential instances of research misconduct, such as plagiarism or data manipulation. This serves as an additional layer of protection against unethical practices.
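To make the reviewer-matching idea from point 1 concrete, here is a minimal sketch, assuming that each reviewer is represented by a short text profile (for example, keywords or past abstracts) and that similarity is plain bag-of-words cosine similarity. Real matching tools may use much richer representations; the function names, profiles, and the `conflicts` parameter are all hypothetical and only illustrate the principle of ranking experts by topical overlap while excluding declared conflicts of interest.

```python
import math
import re
from collections import Counter


def term_vector(text: str) -> Counter:
    """Lower-cased word counts, ignoring tokens of two characters or fewer."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return Counter(t for t in tokens if len(t) > 2)


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse term-count vectors (0.0 to 1.0)."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_reviewers(manuscript, profiles, conflicts=(), top_k=3):
    """Rank reviewers by similarity between the manuscript and each profile,
    skipping anyone listed in `conflicts` (e.g. co-authors)."""
    excluded = set(conflicts)
    target = term_vector(manuscript)
    scored = [
        (name, cosine_similarity(target, term_vector(profile)))
        for name, profile in profiles.items()
        if name not in excluded
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical reviewer profiles, e.g. built from each reviewer's past abstracts.
profiles = {
    "Reviewer A": "statistical methods for clinical trials and meta-analysis",
    "Reviewer B": "deep learning for protein structure prediction",
    "Reviewer C": "meta-analysis of randomized clinical trials in cardiology",
}
manuscript = "A meta-analysis of randomized clinical trials of statins"

# Prints "Reviewer C: 0.83" then "Reviewer A: 0.58".
for name, score in rank_reviewers(manuscript, profiles, top_k=2):
    print(f"{name}: {score:.2f}")
```

The ranking is only a shortlist: an editor would still confirm expertise and availability, which is where the human judgment discussed in this article remains essential.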

Fact Check: Notable AI Adoption by Publishers

- Nature’s Use of AI: A study led by Michèle Nuijten and Chris Hartgerink used the program “Statcheck” to detect statistical inconsistencies in the psychological literature. Of the 30,717 papers examined, 16,695 used statistics for hypothesis testing, and potential errors were found in roughly half of those. The findings sparked a debate about the utility and ethical implications of automated tools for scrutinizing research. Although critics consider Statcheck immature and itself error-prone, it could encourage researchers to be more vigilant about their work. Some see it as a way to maintain scientific integrity, while others warn of potential misuse and of distraction from substantive discussion. Journals and publishers are exploring adopting the program, with the ultimate aim of improving transparency and reproducibility in research.

- Elsevier Releases AI Software: In July 2023, Elsevier unveiled an alpha version of Scopus AI, a generative AI tool designed to help researchers gain deeper insights quickly. The tool combines AI with Scopus content and data to offer easy-to-read topic summaries drawn from over 27,000 academic journals, 7,000 publishers, 1.8 billion citations, and 17 million author profiles. It also supports natural language queries and “Go Deeper Links” for further exploration, aiming to reduce reading time and the risk of misinformation. Customer testing of Scopus AI is underway, with a full launch expected in early 2024. Elsevier states that responsible AI and data privacy are central to its product development.
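The core idea behind Statcheck is simple to illustrate: take a reported test statistic, recompute its p-value, and compare it with the p-value the authors reported. Statcheck itself is an R package that extracts APA-formatted results for several test types; the Python below is only a minimal, hypothetical sketch of the same idea, assuming two-tailed z tests reported in APA style (for example “z = 2.20, p = .028”). It ignores rounding of the test statistic itself, and its names (`check_z_reports`, `Check`) are illustrative, not Statcheck’s API. A “gross” inconsistency here means the recomputed p-value flips the significance decision at alpha = .05.

```python
import math
import re
from dataclasses import dataclass

# Matches APA-style z-test reports such as "z = 2.20, p = .028" or "z = -3.5, p < .001".
Z_REPORT = re.compile(r"\bz\s*=\s*(-?\d*\.?\d+)\s*,\s*p\s*([<>=])\s*(\d*\.\d+)")


@dataclass
class Check:
    z: float
    relation: str
    reported_p: float
    recomputed_p: float
    consistent: bool
    gross: bool  # inconsistency that flips significance at the chosen alpha


def two_tailed_p_from_z(z: float) -> float:
    """Two-tailed p-value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2))


def check_z_reports(text: str, alpha: float = 0.05) -> list:
    results = []
    for z_str, relation, p_str in Z_REPORT.findall(text):
        z = float(z_str)
        reported = float(p_str)
        recomputed = two_tailed_p_from_z(z)
        recomputed_significant = recomputed < alpha
        decimals = len(p_str.split(".")[1])  # precision the authors reported
        if relation == "=":
            consistent = round(recomputed, decimals) == reported
            gross = (not consistent) and ((reported < alpha) != recomputed_significant)
        elif relation == "<":
            consistent = recomputed < reported
            gross = (not consistent) and ((reported <= alpha) != recomputed_significant)
        else:  # ">" reports are checked for consistency only
            consistent = recomputed > reported
            gross = False
        results.append(Check(z, relation, reported, recomputed, consistent, gross))
    return results


text = (
    "The first effect was reliable (z = 2.20, p = .028), the second was not "
    "(z = 1.50, p = .03), and the third was strong (z = -3.5, p < .001)."
)
for c in check_z_reports(text):
    status = "OK" if c.consistent else ("GROSS" if c.gross else "inconsistent")
    print(f"z = {c.z}: reported p {c.relation} {c.reported_p}, "
          f"recomputed {c.recomputed_p:.4f} -> {status}")
```

In this example, the second report is flagged because z = 1.50 corresponds to a two-tailed p of about .13, not .03. As the debate around Statcheck shows, such a flag is a prompt for human follow-up, not a verdict of misconduct.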

The Real Concern: How Much AI Integration Should Be Allowed?

As we contemplate integrating AI tools into the peer review process, a pivotal question emerges: even if AI tools hold “great promise,” should we allow them to be used in peer review, the very process that upholds trust and ethics in science?

Reviewers are expected, and trusted, to maintain the confidentiality of the research throughout the review process. Consequently, using AI tools to assist with peer review, for instance by uploading a manuscript to a third-party service, could violate that confidentiality requirement. Additionally, as per the National Institutes of Health’s (NIH) recent guide notice NOT-OD-22-044 on Maintaining Security and Confidentiality in NIH Peer Review: Rules, Responsibilities and Possible Consequences, scientific peer reviewers are prohibited from using natural language processors (NLP), large language models (LLM), or similar generative AI technologies to assess manuscripts and write peer review evaluations.

Currently, there seems to be no solution to this problem. But since we have to adapt anyway, can we find some middle ground? I think we can, but it will require extensive discussion and collaboration.

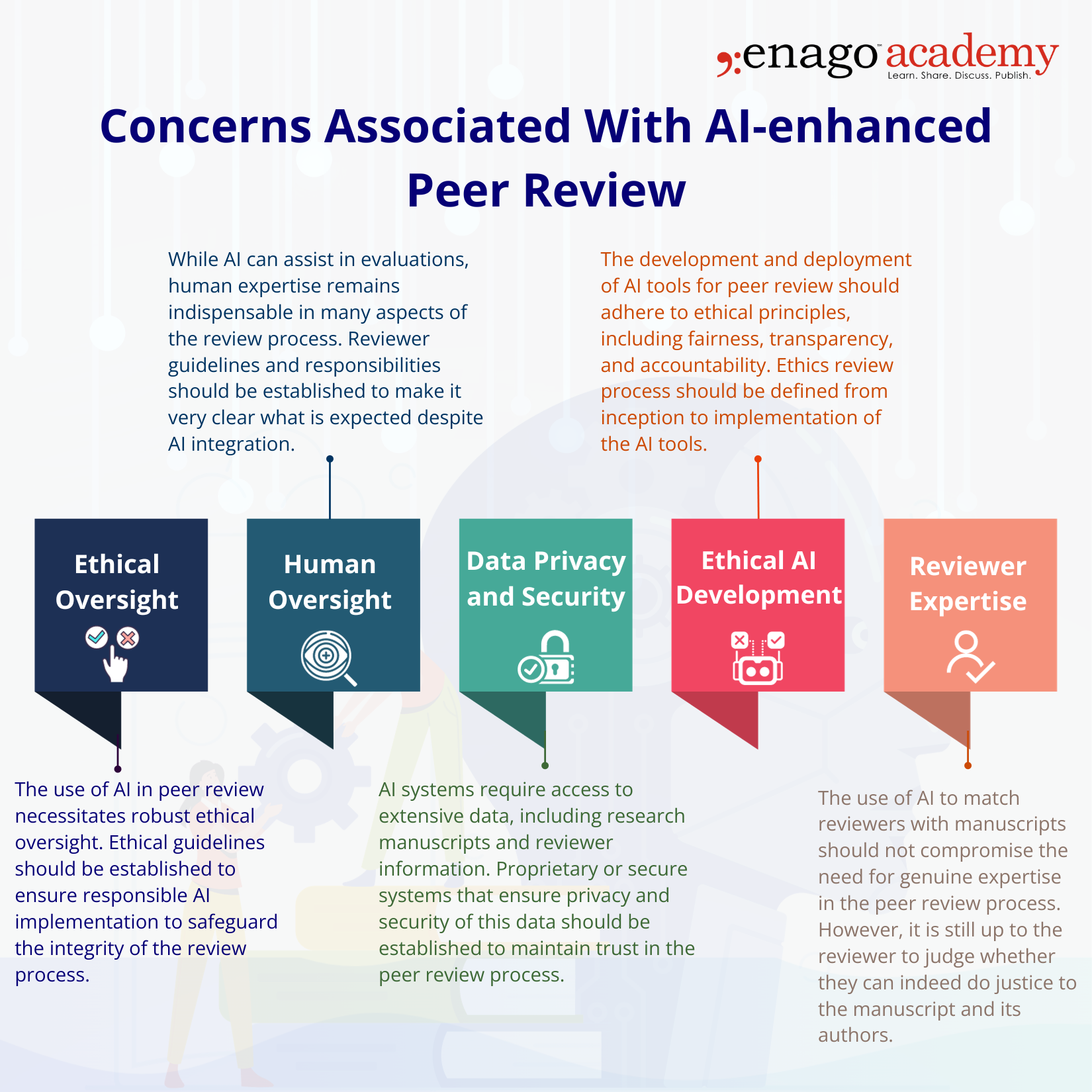

Just as we embrace the promises of AI-enhanced peer review, it is imperative to not overlook these considerations, to name a few:

Some Predictions for the Future of Peer Review and Publishing

As technology continues to advance and becomes indispensable, the future of peer review looks promising yet complex. Here are some predictions:

1. Open Peer Review

There is a growing trend toward open peer review, in which the identities of authors and reviewers are known to each other. With the right guidelines and AI-assisted reviewer selection, this approach can promote speed, transparency, and accountability in the review process.

2. Acceptance Decision Prediction in Peer Review Through Sentiment Analysis

AI tools can already help identify potential conflicts of interest and generate reports on pre-defined parameters. In addition, sentiment analysis of review reports could give journal editors a predicted acceptance decision, once that prediction has been checked for false positives and false negatives.

3. Quality Control

Publishers will be required to invest more in tools, training, and processes to monitor and streamline AI integration at each step in the publication process.

4. Diverse Peer Reviewers

AI can help publishers diversify the pool of peer reviewers by drawing on previous acceptance decisions, once potentially biasing parameters have been removed.

5. Post-Publication Review

The traditional model of pre-publication peer review is being complemented by post-publication review. Online platforms allow researchers to comment on published papers, enabling continuous assessment and improvement.

6. Transparent Review Criteria

Review criteria will become more standardized and transparent. Publishers will need to give editors and reviewers clearer guidelines, helping to improve consistency and fairness.

7. Rapid Review

Some journals have already adopted rapid review models that deliver quicker decisions and allow timely dissemination of research. The process could be expedited further by automating routine mechanical checks now performed by humans and by promoting standardized post-publication or open review.

8. Preprint Submissions

Submissions to preprint servers such as arXiv, medRxiv, ChemRxiv, PsyArXiv, and bioRxiv have increased significantly over the last decade and are poised to grow further. Peer review of preprints will soon become the norm and will require AI input to make assessment more efficient.

9. Interdisciplinary Collaboration

Increasingly, research crosses disciplinary boundaries, and peer review and publishing need to adapt to accommodate interdisciplinary work. Collaboration among publishers, researchers, and institutions is also set to grow.

Finally…

Trust in science is fundamental to the advancement of knowledge and the betterment of society. As we navigate the AI era, its integration into peer review holds immense promise for preserving scholarly integrity. AI can enhance efficiency, objectivity, transparency, and accountability in the peer review process. However, challenges related to ethics, data privacy, and algorithmic bias will need to be addressed. Clear guidelines and oversight mechanisms must be established to ensure responsible AI use.

The journey toward a future in which AI-enhanced peer review is standard practice requires collaboration, ethical consideration, and a commitment to upholding the principles of responsible research. With the right balance between automation and human expertise, we can usher in an era in which trust in science remains unwavering. The question is not whether to allow AI tools to help uphold trust in science through peer review, but how to integrate them thoughtfully and responsibly.