How AI Is Shaping Peer Review in 2025: Key findings from Enago’s global survey

Executive Summary:

Artificial intelligence is rapidly entering the peer review conversation, offering tools for everything from tone editing to triage, and stirring intense debate about its rightful place. We present data gathered from 124 researchers, editors, publishers, and reviewers from diverse disciplines around the world through a comprehensive, anonymous online survey.

Our survey, titled “What Publishing Stakeholders Really Think About AI-Assisted Peer Review?”, aims to understand AI usage, perceived benefits, ethical concerns, and training needs. Our findings reveal not consensus but a crossroads: optimism, skepticism, curiosity, and caution all converge at a pivotal moment that may shape research practices for decades. While most respondents see potential in AI-assisted peer review, many lack exposure or remain skeptical.

Key Takeaways:

- For the small but growing number of early adopters, AI use is highly focused on editing and communication improvements.

- Confidentiality emerges as the primary concern among non-users (20%), followed by doubts about the accuracy of AI tools (19%) and their perceived inability to ensure fair judgment (16%).

- Reviewer views on AI remain split. Support for AI-assisted peer review hinges on transparency, oversight, and institutional approval, underscoring the need for broad stakeholder input to guide ethical adoption.

The Big Question: Where does AI fit in peer review?

Most reviewers see promise in AI. Many remain wary. Researchers, editors, publishers, and ethicists everywhere are debating: Will artificial intelligence transform peer review for the better, or pose new risks to the gatekeeping process? Our field stands at a crossroads, and the answer depends on what our community does next.

As global research becomes faster, broader, and more complex, AI tools are being offered as solutions to reviewer overload and bottlenecks. But are these tools ready? Moreover, are we ready to integrate AI into the process?

Peer Review Week gave our community a perfect opportunity to weigh in, and these perspectives will go a long way toward shaping the conversation around AI’s role in research and charting the course for peer review’s next chapter.

How Do Reviewers Feel About AI-Assisted Peer Review?

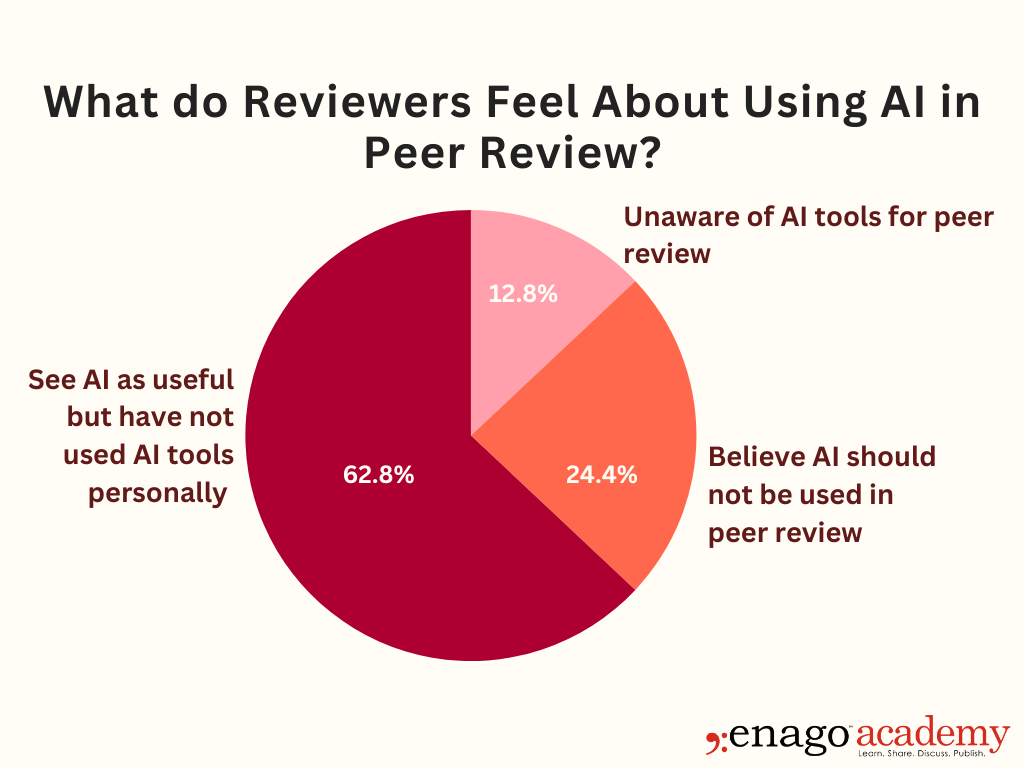

The split is as much about opportunity as opinion. Preliminary findings paint a picture of ambivalence. Nearly 63% of reviewers believe AI could be useful in peer review but have not yet tried it. Meanwhile, about 24% feel AI simply doesn’t belong in the process, and over 12% are not even aware of relevant tools. In other words, most reviewers, while curious, tread cautiously, watching and waiting as the peer review landscape continues to shift around them. This measured approach reflects a broader hesitation to fully embrace AI without clearer evidence of its benefits and safeguards.

How are Reviewers Actually Using AI?

Beneath the overarching debate, real adoption is fragmented but evolving in instructive stages. For those experimenting, AI is playing a supportive, not substitutive, role, and the top use cases are strikingly practical:

- 27% have used AI to polish the tone or clarity of review comments, making feedback more constructive.

- 21% use it to assist with literature discovery, navigating vast, complex content more efficiently.

- 18% turn to AI to summarize research papers to make sense of submissions faster.

- Only around 10–11% have tested AI for specialized evaluation tasks such as spotting missing references, verifying statistics, or assessing novelty.

What emerges is a pattern: AI is a tool for assistance, not an automation of judgment. Reviewers are letting AI lighten the load, while high-stakes technical checks remain firmly human terrain. Reviewers are most comfortable letting algorithms help clarify language or navigate dense literature but keep human instincts at the core of quality decisions. This reveals a practical caution that deserves broader recognition in policy discussions. Yet, this cautious optimism coexists with clear reservations.

What Holds Reviewers Back?

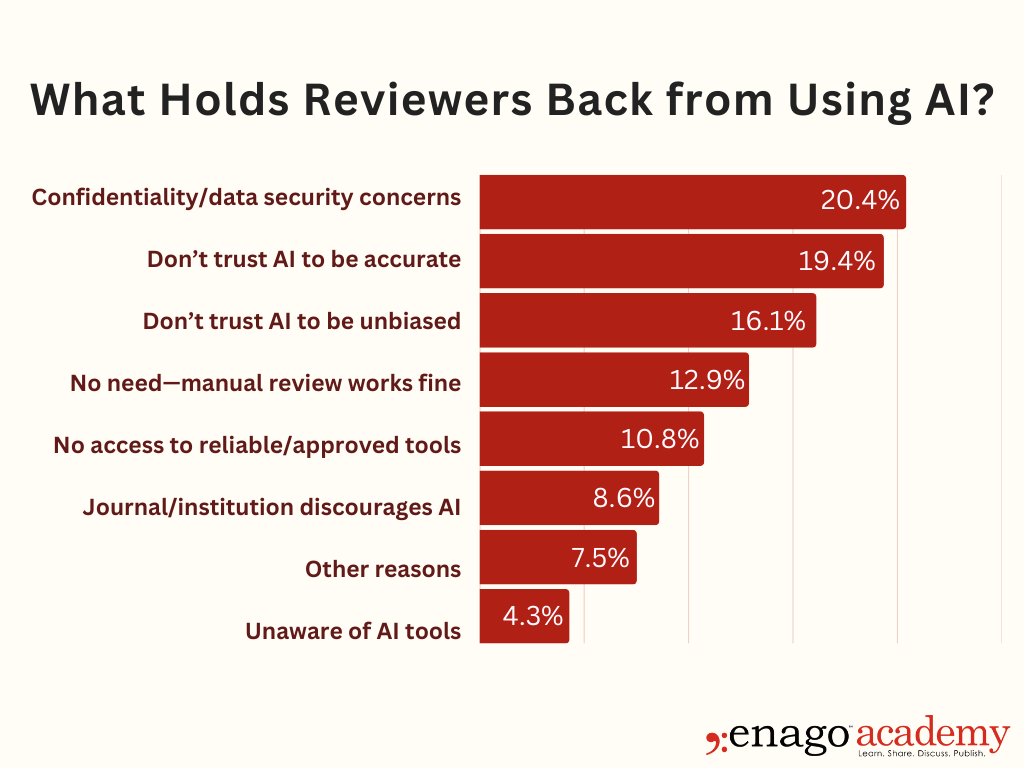

Preliminary findings indicate that respondents who have not yet adopted AI often cite concerns extending beyond technical barriers, highlighting issues that warrant careful consideration.

- 20% worry about confidentiality breaches: “What if uploading a manuscript leaks confidential ideas?” Authors need assurance that their unpublished ideas and data will be protected by strict publisher policies during peer review and editorial processes.

- 19% are unconvinced about accuracy: “Can AI really judge scholarly nuance?”

- 16% question whether it can make fair, unbiased judgments

- 11% lack access to approved or reliable tools

- 9% report that their universities and research institutes actively discourage AI use

Concerns about trust, privacy, and control are not just footnotes; they are the heart of the debate. While 13% of reviewers simply don’t feel the need to use AI, the top concerns (confidentiality breaches, bias reinforcing systemic inequities, and the imperative for transparent human oversight) reflect the professional standards that uphold peer review integrity. These issues go beyond surface-level ethics and underscore why adoption remains cautious and contested. Without clear, transparent policies to address these barriers, AI integration will remain partial and fraught with mistrust. This tension leads to ethical gray areas and competing priorities that spark real fascination and friction.

Ethical Gray Areas and Competing Priorities

When reviewers are presented with specific use cases, their answers highlight just how layered the debate has become and how divided the community remains on issues of disclosure, oversight, and legitimacy:

- Respondents support AI involvement in reviewer selection (44%) or manuscript screening (13%) only if the use is transparent and the tools are approved by the journal.

- AI-generated content (11%) and AI-facilitated language translation (20%) are particularly thorny; most support their use only when disclosure, quality control, and policy approval are guaranteed.

- Concerns about over-reliance and loss of human judgment temper even positive opinions about efficiency gains.

- The “black box” nature of commercial AI systems is worrisome because reviewers may trust AI outputs without accountability, potentially allowing errors, bias, or flawed recommendations to go unchecked and thus undermining peer review integrity.

What Truly Motivates Reviewers to Embrace AI Tools?

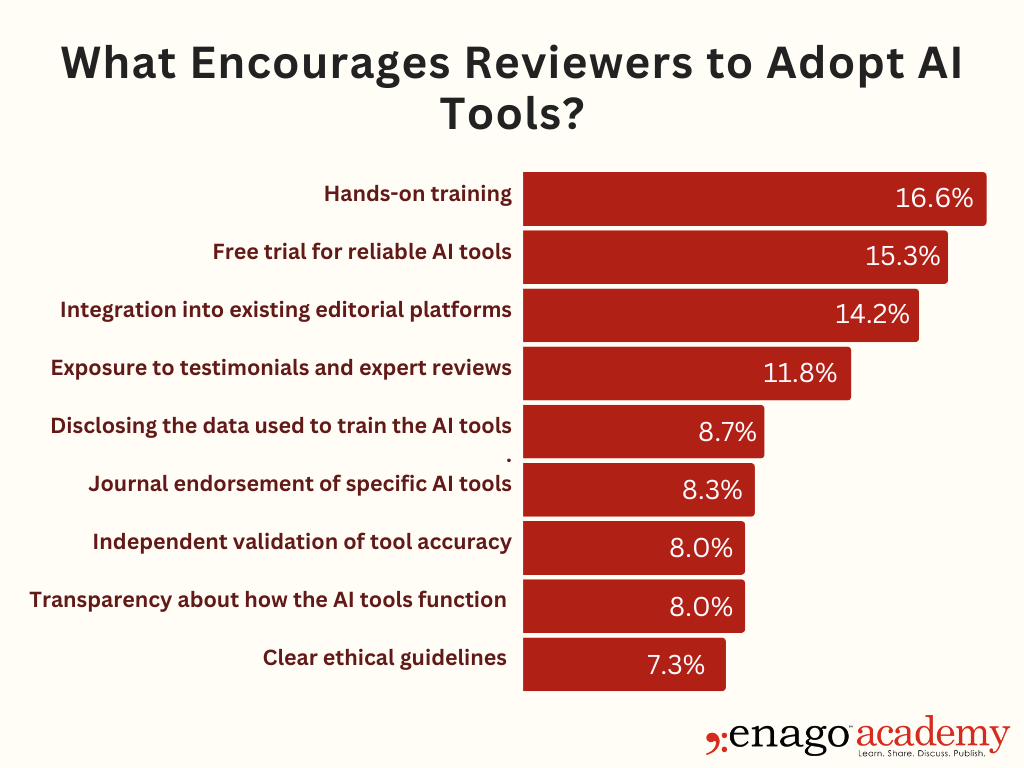

Our analysis shows that reviewers are most motivated to adopt AI when both practicality and trust align. On the practical side, they value hands-on training, free trials, and seamless integration into existing editorial platforms. Social proof also plays a role: peer testimonials (8.3%) and endorsements from journals or publishers (11.8%) help build early confidence.

But what truly drives adoption is trust. Reviewers place the highest importance on transparent and accountable AI. Alongside disclosure of training data, the factors that inspire the most confidence are independent validation of accuracy and fairness (14.2%), clear model explainability (15.3%), and strong ethical guidelines (16.7%).

Thus, reviewers may try AI because it’s easy to use, but they embrace it when they trust it.

The Bottom Line

The debate around AI in peer review is no longer about whether these tools will enter scholarly workflows, but how they should. Our findings show a community that is curious yet cautious, optimistic yet vigilant. Reviewers are open to AI’s potential, especially for tasks that reduce workload and enhance clarity, but they insist on guardrails that preserve the integrity, confidentiality, and fairness of the evaluative process.

As publishers, editors, and institutions move forward, the path to responsible adoption will depend on meaningful stakeholder engagement, transparent governance, and continual evidence that AI can support, not supplant, human judgment. If the research community gets this balance right, AI can become an asset that strengthens peer review rather than a threat that destabilizes it.

Reviewers see clear value in using AI for efficiency and clarity, but their willingness to adopt these tools ultimately hinges on trust: trust that confidentiality will be protected, that judgments will remain fair, and that human oversight will not be diluted. The future of AI-assisted peer review will be shaped not by technology alone, but by transparent policies, accountable design, and community-driven standards.